Another of Elgg’s less documented but very powerful features is the ability to expose functionality from the core and from user modules in a standard way via a REST-like API.

This gives you the opportunity to develop interoperable web services and provide them to the users of your site, all in a standardised way.

The endpoint

To make an API call you must direct your query at a special URL. The query will be either a GET or a POST request, depending on the command you are executing, and the specific endpoint you use depends on the format you want the result returned in.

The endpoint:

http://yoursite.com/pg/api/[protocol]/[return format]/

Where:

- [protocol] is the protocol being used; for the moment only “rest” is supported.

- [return format] is the format you want your information returned in, either “php”, “json” or “xml”.

This endpoint should then be passed the method and any parameters as GET variables, so for example:

http://yoursite.com/pg/api/rest/xml/?method=test.test&myparam=foo&anotherparam=bar

Would pass “foo” and “bar” as the given named parameters to the function “test.test” and return the result in XML format.
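As a rough client-side illustration, the URL above can be assembled programmatically. This is only a sketch: the helper name build_api_url is made up for this example and is not part of Elgg.

```python
from urllib.parse import urlencode


def build_api_url(base_url, method, return_format="xml", protocol="rest", **params):
    """Build the GET URL for an API call (hypothetical helper, not part of Elgg)."""
    # The method name comes first, followed by the named parameters.
    query = urlencode({"method": method, **params})
    return f"{base_url.rstrip('/')}/pg/api/{protocol}/{return_format}/?{query}"


url = build_api_url("http://yoursite.com", "test.test", myparam="foo", anotherparam="bar")
print(url)
```

Using urlencode rather than string concatenation means parameter values containing spaces or ampersands are escaped correctly.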

Notice also that the API uses the “PG” page handler extension. This means that it would be a relatively simple matter to add a new API protocol, or to replace the entire API subsystem, in a module, should you be so inclined.

Return result

The result of the api call will be an entity encoded in your chosen format.

This entity will have a “status” parameter – zero for success, non-zero denotes an error. Result data will be in the “result” parameter. You may also receive some messages and debug information.
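A client will typically want to check the status before touching the result. The following is a minimal sketch for the “json” return format. The sample payloads are invented for illustration, and the “message” field name is an assumption; only “status” and “result” are described above.

```python
import json


class ApiError(Exception):
    """Raised when the API reports a non-zero status."""


def unwrap_result(raw_json):
    """Return the "result" value of a reply, or raise ApiError on failure."""
    reply = json.loads(raw_json)
    status = reply.get("status")
    if status != 0:
        # "message" is an assumed field name for any error text.
        raise ApiError(f"API call failed with status {status!r}: {reply.get('message')}")
    return reply.get("result")


# Invented sample replies, shaped after the description above.
sample_ok = '{"status": 0, "result": ["system.api.list", "auth.gettoken"]}'
sample_error = '{"status": -1, "message": "Method not found"}'

print(unwrap_result(sample_ok))
try:
    unwrap_result(sample_error)
except ApiError as error:
    print(error)
```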

Exporting a function

Any Elgg function – core or module – can be exposed via the API. All you have to do is declare it using expose_function() from within your code, passing the method name, the handler and any parameters. Note that these parameters must be declared in the same order as they appear in your function.

Listing functions

You can see a list of all registered functions using the built-in API command “system.api.list”. This is also a useful test to see if your client is configured correctly.

E.g.

http://yoursite.com/pg/api/rest/xml/?method=system.api.list

Authorising and authenticating

Most commands will require some form of authorisation in order to function. There are two main types of authorisation: protocol level, which determines whether a given client is permitted to connect, and user level, whereby a user is identified by a special token in lieu of a username and password.

Protocol level authentication

Protocol level authentication is a way to ensure that commands only come from approved clients to which you have previously issued keys. This is in keeping with many web based API systems and permits you to disconnect clients who abuse your system, or track usage for accounting purposes.

The client must send an HMAC signature together with a set of special HTTP headers when making a call. This ensures that the API call is being made from the stated client and that the data has not been tampered with.

Eagle-eyed readers with long memories will see a lot of similarity with the ElggVoices API I wrote about previously.

The HMAC must be constructed over the following data:

- The Secret Key provided by the target Elgg install (as provided easily by the APIAdmin plugin).

- The current Unix time in seconds, with microsecond precision, as a floating point number, as produced by microtime(true).

- Your API key identifying you to the Elgg api server (companion to your secret key).

- URLEncoded string representation of any GET variable parameters, eg “method=test.test&foo=bar”

- If you are sending post data, the hash of this data.

Some extra information must be added to the HTTP header in order for this data to be correctly processed:

- X-Elgg-apikey – The API key (not the secret key!)

- X-Elgg-time – Microtime used in the HMAC calculation

- X-Elgg-hmac – The HMAC as hex characters.

- X-Elgg-hmac-algo – The algorithm used in the HMAC calculation – eg, sha1, md5 etc

If you are sending POST data you must also send:

- X-Elgg-posthash – The hash of the POST data.

- X-Elgg-posthash-algo – The algorithm used to produce the POST data hash – eg, md5.

- Content-type – The content type of the data you are sending (if in doubt use application/octet-stream).

- Content-Length – The length in bytes of your POST data.

Much of this will be handled for you if you use the built in Elgg API Client.
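For clients that cannot use it, the signing steps can be sketched in a few lines. This is an illustration, not the reference implementation. It assumes the secret key is used as the HMAC key and that the remaining fields are fed in the order listed above (time, API key, GET string, POST hash). The function name sign_request and its arguments are invented for this example, so check the result against your Elgg install before relying on it.

```python
import hashlib
import hmac
import time
from urllib.parse import urlencode


def sign_request(api_key, secret_key, params, post_data=None,
                 hmac_algo="sha1", post_algo="md5", now=None):
    """Return the X-Elgg-* headers for one call (sketch; field order is assumed)."""
    # The same timestamp string must be signed and sent in the header.
    timestamp = str(time.time()) if now is None else str(now)
    query = urlencode(params)

    # Assumption: the secret key is the HMAC key, the other fields are the message.
    mac = hmac.new(secret_key.encode(), digestmod=hmac_algo)
    for part in (timestamp, api_key, query):
        mac.update(part.encode())

    headers = {
        "X-Elgg-apikey": api_key,
        "X-Elgg-time": timestamp,
        "X-Elgg-hmac-algo": hmac_algo,
    }
    if post_data is not None:
        # POST bodies are hashed separately and the hash is included in the HMAC.
        post_hash = hashlib.new(post_algo, post_data).hexdigest()
        mac.update(post_hash.encode())
        headers["X-Elgg-posthash"] = post_hash
        headers["X-Elgg-posthash-algo"] = post_algo
        headers["Content-type"] = "application/octet-stream"
        headers["Content-Length"] = str(len(post_data))

    headers["X-Elgg-hmac"] = mac.hexdigest()
    return headers


example = sign_request("my-api-key", "my-secret", {"method": "test.test", "foo": "bar"})
for name, value in example.items():
    print(f"{name}: {value}")
```

Note that the secret key itself never appears in the headers; only the API key and the resulting signature are sent.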

User level tokens

User level tokens are used to identify a specific user on the target system, in much the same way as if they were to log in with their user name and password, but without the need to send this for every API call.

Tokens are time limited, and so it will be necessary for your client to periodically refresh the token they use to identify the user.

Tokens are generated by using the API command “auth.gettoken” and passing the username and password as parameters, eg:

http://yoursite.com/pg/api/rest/xml/?method=auth.gettoken&username=foo&password=bar
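Because tokens expire, a client usually wraps the token in a small cache that fetches a new one when the old one is due to run out. The sketch below shows one way to do that. The class name TokenCache, the fetch_token callable and the lifetime value are all assumptions made for this example; the actual token lifetime is set by the target Elgg install and is not stated here.

```python
import time


class TokenCache:
    """Hold a user token and refresh it once it is considered expired (sketch)."""

    def __init__(self, fetch_token, lifetime_seconds, clock=time.monotonic):
        # fetch_token is any callable that performs the auth.gettoken call
        # and returns the token string.
        self._fetch_token = fetch_token
        self._lifetime = lifetime_seconds
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        """Return a valid token, requesting a new one if needed."""
        now = self._clock()
        if self._token is None or now >= self._expires_at:
            self._token = self._fetch_token()
            self._expires_at = now + self._lifetime
        return self._token


# Stand-in for a real auth.gettoken request, so the example runs offline.
issued = []


def fake_fetch():
    issued.append(f"token-{len(issued) + 1}")
    return issued[-1]


cache = TokenCache(fake_fetch, lifetime_seconds=3600)  # hypothetical lifetime
print(cache.get())
print(cache.get())  # served from the cache, no second request
```

Keeping the refresh logic in one place also means the username and password only need to be sent when a new token is actually required.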

Anonymous methods

Anonymous methods (such as “system.api.list”) can be executed without any form of authentication, and so accept connections from any client regardless of whether it provides a user token. This is useful in certain situations, but it goes without saying that you should not expose sensitive functionality this way.

To make a method anonymous, set $anonymous=true in your call to expose_function().

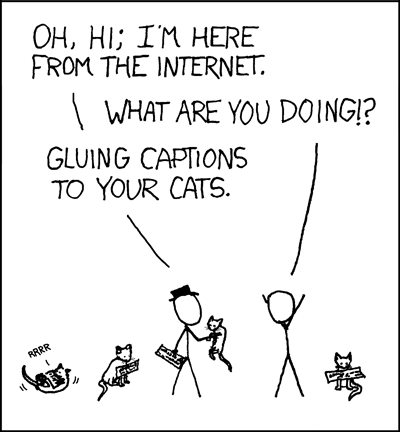

Image “In UR Reality” by XKCD